Why?

Testing a service running on the OpenShift platform is a challenge, especially in organizations where Kubernetes has not been adopted and IT support is lacking. Therefore, I decided to automate the installation of an OpenShift cluster and of the services I deal with on a daily basis.

OpenShift installation is very well documented and virtually fully automated for public cloud providers and for VMware vSphere. With a few commands and well-described preparation steps, we can get a cluster up in a few hours.

However, experience shows me that there is also a need to install on bare-metal architecture, which in practice mostly means dedicated VMs on a hypervisor.

This applies when the team responsible for evaluating a solution is constrained by internal processes, and forcing a reconfiguration of the vSphere environment just for testing, or getting carte blanche for cloud resources, is a significant obstacle to an efficient implementation. In such a situation, the easiest approach is to define the hardware requirements as a list of virtual machines and install the whole environment from scratch. And here we face a much more demanding task: configuring supporting services (DNS, web server, NTP, load balancer), installing tools, creating configuration files and monitoring the entire process. Of course, all of this can be done, but a test should be a transparent process focused on the target solution rather than on tedious platform preparation.

That’s why I set out to automate the installation of OpenShift and Guardium Insights, with the goal of reducing the time this process takes and making it much simpler for people without much experience in network and OS services or Kubernetes.

Table of contents

- OpenShift cluster architecture

- OpenShift installation architecture

- Automatic OCP installation tools and requirements

- Bastion installation

- Proto-bastion for air-gapped installation

- gi-runner download

- Air-gap installation archives preparation

- Cluster nodes setup

- init.sh – gathering cluster info

- Playbook 1 – completing the bastion configuration process

- Playbook 2 – installation services setup

- Cluster boot phase

- Playbook 3 – OpenShift installation finalization

- Playbook 4 – ICS installation

- Playbook 5 – GI installation

- Playbook 50 – OpenLDAP on bastion

- Summary

OpenShift cluster architecture

The architecture of an OpenShift cluster depends directly on the services we will place on it. For test environments, the main goal is to reduce the requirements to a reasonable minimum while still providing good performance. Personally, I never propose the bare minimum; instead, I try to balance the requirements so that there is room for activity peaks or additional needs that may arise.

When starting the automation project, I had to make some firm assumptions that would define the scope of functionality while allowing it to be implemented relatively quickly. One of them was which versions of OpenShift (OCP) to support. Given Red Hat’s existing support, the decision was obvious: versions 4.6 and later are supported.

The automation should allow you to define different cluster architectures, which I briefly discuss below.

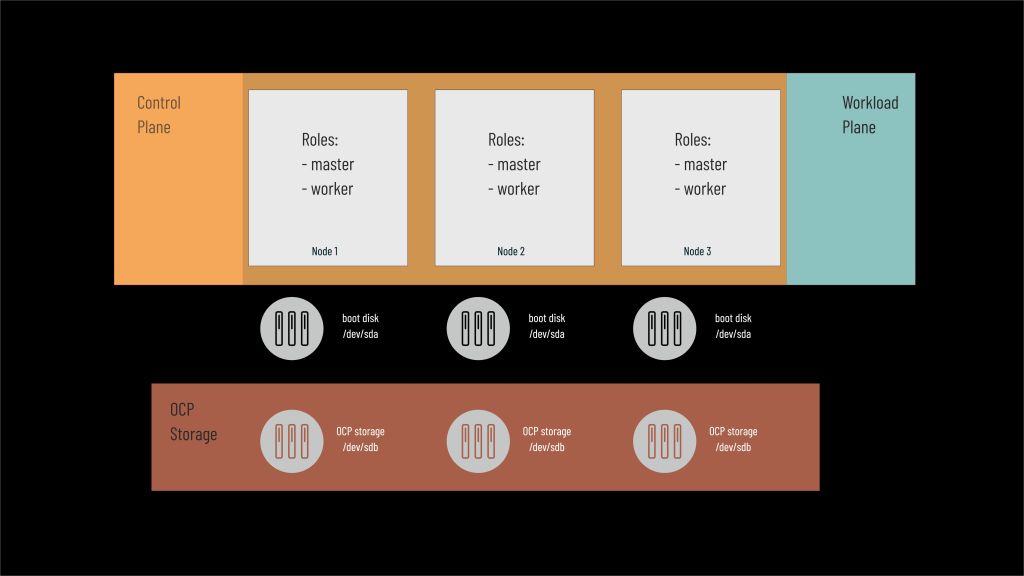

3 masters only

This is the smallest supported OCP cluster: it consists of only three nodes, each of which performs both master (control plane) and worker (workload plane) functions. In this architecture, the issue of disk resources cannot be overlooked.

OCP storage

Each node is equipped with a boot disk that holds the CoreOS operating system and its partitions. In most cases its size is 120-150 GB, regardless of the node’s role in the cluster. However, running services also need disk space for their data, logs and configuration files. This data cannot live inside the containers, because container file systems are ephemeral and return to their initial state every time a container restarts. Hence, we need to provide virtualized disk space in which the cluster can store persistent data.

Because there are so many options, I limited the automation to two:

- OpenShift Container Storage (OCS)

- rook-ceph

The first is, de facto, a commercially supported distribution of the second.

For production use, OCS is recommended because it is supported by Red Hat, but for testing purposes I recommend the upstream implementation (rook-ceph), because it is less demanding in terms of memory and CPU.

OCS is installed through the system’s built-in Operator Lifecycle Manager (OLM), whereas rook-ceph is installed through an operator that is independent of OLM.

In the future, I may add support for other storage solutions, such as Portworx.

So in the architecture with only three nodes, each node is equipped with two disks, the second of which will be used for storage virtualization (OCP Storage).

Both OCS and rook-ceph require at least three disks of the same size, attached to three different workers, to ensure reliability and high performance.

Their size should match the needs of our services, but remember that, depending on the chosen protection mechanism, 33 to 66 percent of the space is used to store redundant data.

With this in mind, remember that the total physical disk space available to OCP Storage is not the same as the space our services can consume, and plan the disk sizes accordingly.
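To put numbers on this, the following bash sketch computes usable capacity from raw capacity. It is a hypothetical helper, not part of gi-runner, and it assumes the two common Ceph protection schemes: n-way replication, where only 1/n of the raw space holds unique data (three copies leave 66 percent for redundancy), and erasure coding with k data and m coding chunks, where k/(k+m) of the space is usable (a 2+1 layout leaves 33 percent for redundancy). It ignores the free-space headroom Ceph keeps before it reports the cluster as full.

```bash
# Hypothetical helper (not part of gi-runner): usable capacity of an OCP Storage pool.
# usage: usable_capacity_gb <raw_total_gb> replica <copies>
#        usable_capacity_gb <raw_total_gb> ec <data_chunks> <coding_chunks>
usable_capacity_gb() {
  local raw=$1 mode=$2
  case "$mode" in
    replica)
      # every object is stored <copies> times
      echo $(( raw / $3 ))
      ;;
    ec)
      # only k out of every k+m chunks carry data
      echo $(( raw * $3 / ($3 + $4) ))
      ;;
    *)
      echo "unknown protection mode: $mode" >&2
      return 1
      ;;
  esac
}

# Three 500 GB storage disks give 1500 GB of raw space
usable_capacity_gb 1500 replica 3   # 3-way replication: prints 500
usable_capacity_gb 1500 ec 2 1      # erasure coding 2+1: prints 1000
```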

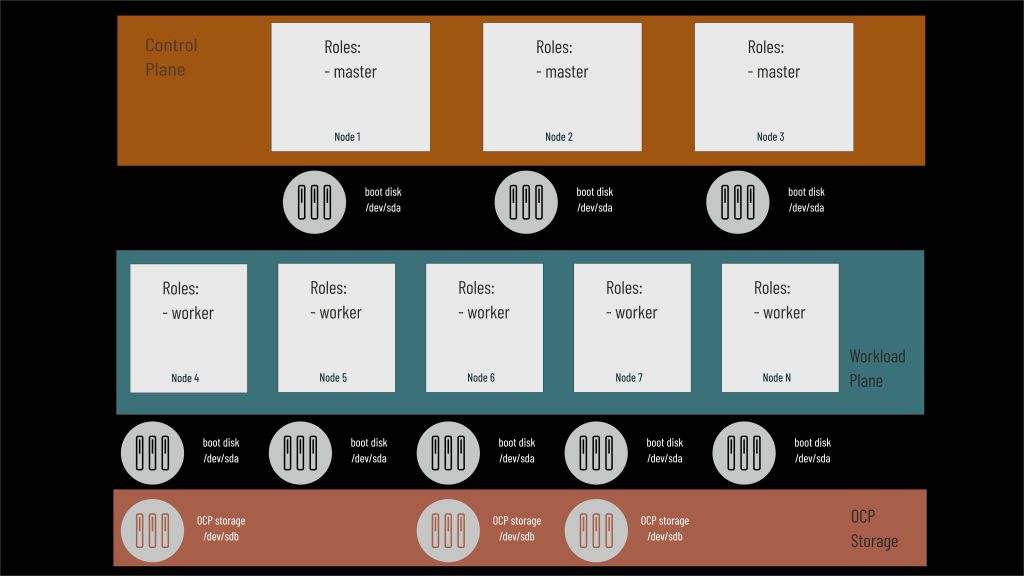

Three or more workers

This is the most popular architecture, with a separate Control Plane of three master nodes and a group of workers. At least two workers are required, but in practice three are needed to configure the cluster storage. The standard OCS configuration requires the number of nodes sharing disks to be a multiple of three, and the automation enforces the same limitation.
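The node-count rules above can be checked before starting an installation. The bash functions below are a hypothetical pre-flight sketch, not gi-runner code: count_list counts the entries of a comma-separated value such as GI_WORKER_IP, and check_node_counts applies the two limits described here (at least two workers, and a storage node count that is a non-zero multiple of three).

```bash
# Hypothetical pre-flight check (not part of gi-runner).

# Count the entries of a comma-separated value such as GI_WORKER_IP
count_list() {
  local -a items
  IFS=, read -ra items <<< "$1"
  echo "${#items[@]}"
}

# usage: check_node_counts <worker_count> <storage_node_count>
check_node_counts() {
  local workers=$1 storage_nodes=$2
  if (( workers < 2 )); then
    echo "ERROR: at least 2 workers are required (got $workers)" >&2
    return 1
  fi
  if (( storage_nodes == 0 || storage_nodes % 3 != 0 )); then
    echo "ERROR: storage nodes must be a multiple of 3 (got $storage_nodes)" >&2
    return 1
  fi
  echo "OK: $workers workers, $storage_nodes storage nodes"
}

check_node_counts "$(count_list 192.168.100.121,192.168.100.122,192.168.100.123)" 3
```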

Separated storage plane

Recommended architecture for large application environments, where 3, 6, 9 or more nodes are dedicated solely to managing cluster disk space (the Storage Plane) and services run on any number of workers (at least two). Through the taint mechanism, Storage Plane nodes prevent other services from being scheduled on them. This gives the best efficiency in accessing persistent data.
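The tainting mentioned above is done with oc. The sketch below only prints the commands for a set of nodes so that they can be reviewed before execution. The helper name is hypothetical, and the label and taint keys are the ones Red Hat documents for dedicating nodes to OCS, so verify them against the documentation of your OCS version. Pods without a matching toleration are not scheduled on tainted nodes, while the OCS components tolerate this taint.

```bash
# Hypothetical helper: print (not run) the oc commands that dedicate nodes to OCS.
# Keys as documented by Red Hat for OCS storage nodes; verify for your OCS version.
# usage: ocs_dedicate_cmds <node_name>...   (names as shown by `oc get nodes`)
ocs_dedicate_cmds() {
  local node
  for node in "$@"; do
    # label: marks the node as an OCS storage node
    echo "oc label node $node cluster.ocs.openshift.io/openshift-storage=''"
    # taint: keeps pods without a matching toleration off the node
    echo "oc adm taint node $node node.ocs.openshift.io/storage=true:NoSchedule"
  done
}

ocs_dedicate_cmds ocs1 ocs2 ocs3
# after reviewing the output, apply it with:
#   ocs_dedicate_cmds ocs1 ocs2 ocs3 | sh
```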

One node is not supported but doable

CodeReady Containers is Red Hat’s answer to the need for a simple, lightweight system for learning OpenShift and developing small applications for it; hence, in OCP 4.x the single-node architecture is not supported, although it is possible. The automation therefore allows such a cluster to be created, with the explicit assumption that it is strictly for evaluation. It should not be used for real solution testing. In this architecture OCS cannot be used, and rook-ceph is the only storage option.

OpenShift installation architecture

After selecting the OCP cluster architecture, it’s time to determine how to automatically install it.

The key factor is direct access to the Internet resources where the image repositories are located. Based on this, OCP installation can follow one of three variants:

- with access to the Internet

- access via proxy

- without access to the Internet (air-gapped, offline, disconnected)

With access to the Internet

The preferred approach, because it makes upgrading the cluster and services easy. However, be aware that most companies’ Internet access policies filter traffic, so make sure that the required URLs are not blocked.

The table below lists the URLs required to install OCP and Guardium Insights.

| # | URL | Element |
|---|-----|---------|
| 1 | github.com/zbychfish, codeload.github.com | gi-runner access |
| 2 | mirrors.fedoraproject.org, mirrors.rit.edu (the Fedora mirror list contains hundreds of servers; to limit the number of URLs to open, review the Fedora documentation) | Fedora packages and updates |
| 3 | galaxy.ansible.com | Ansible Galaxy extensions |
| 4 | github.com/poseidon/matchbox/* | matchbox |
| 5 | pypi.org | Python modules |
| 6 | mirror.openshift.com | Red Hat images |
| 7 | quay.io, registry.redhat.io, infogw.api.openshift.com | OCP installation |
| 8 | registry-1.docker.io | rook-ceph, ICS |
| 9 | ocsp.digicert.com, registry.hub.docker.com | Guardium Insights |

Access via proxy

The most common approach to Internet access for production services, where external data is retrieved indirectly via a web or FTP server in the DMZ and made available to internal resources. From the point of view of installing and operating an OCP cluster, it only requires additional node configuration. A proxy usually allows access only to strictly defined URLs, so their reachability should be confirmed before installation.
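Reachability can be confirmed with a short script. The check_urls function below is a hypothetical sketch that sends an HTTP HEAD request to each URL with curl, either directly (ignoring any proxy environment variables) or through the proxy passed as its first argument. Any HTTP response counts as reachable, while connection failures and timeouts are reported. The hosts to test come from the table above.

```bash
# Hypothetical pre-installation check (not part of gi-runner).
# usage: check_urls <proxy_url|""> <url>...
check_urls() {
  local proxy=$1 url rc=0
  shift
  local -a proxy_opt
  if [[ -n $proxy ]]; then
    proxy_opt=(--proxy "$proxy")
  else
    proxy_opt=(--noproxy '*')   # direct mode: ignore proxy environment variables
  fi
  for url in "$@"; do
    # HEAD request; any HTTP response (even 4xx/5xx) means the host is reachable
    if curl -s -I -o /dev/null --max-time 10 "${proxy_opt[@]}" "$url" 2>/dev/null; then
      printf '%-12s%s\n' OK "$url"
    else
      printf '%-12s%s\n' UNREACHABLE "$url"
      rc=1
    fi
  done
  return "$rc"
}

# direct access:
#   check_urls "" https://quay.io https://registry.redhat.io https://mirror.openshift.com
# through a proxy:
#   check_urls "http://<proxy_ip>:<proxy_port>" https://quay.io https://registry.redhat.io
```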

Air-gapped environment

The most challenging approach, where access to resources on the Internet is prohibited. Especially during testing, it may be the only approach that security requirements allow. In this situation, we must prepare in advance a copy of all tools, installation files and container images that may be needed during installation, copy them to the environment without Internet access, and then make them available in a form that the whole installation process can use.

The example installation described in the following sections is based on this case, as it is the most complicated one and requires the most automation steps.

Automatic OCP installation tools and requirements

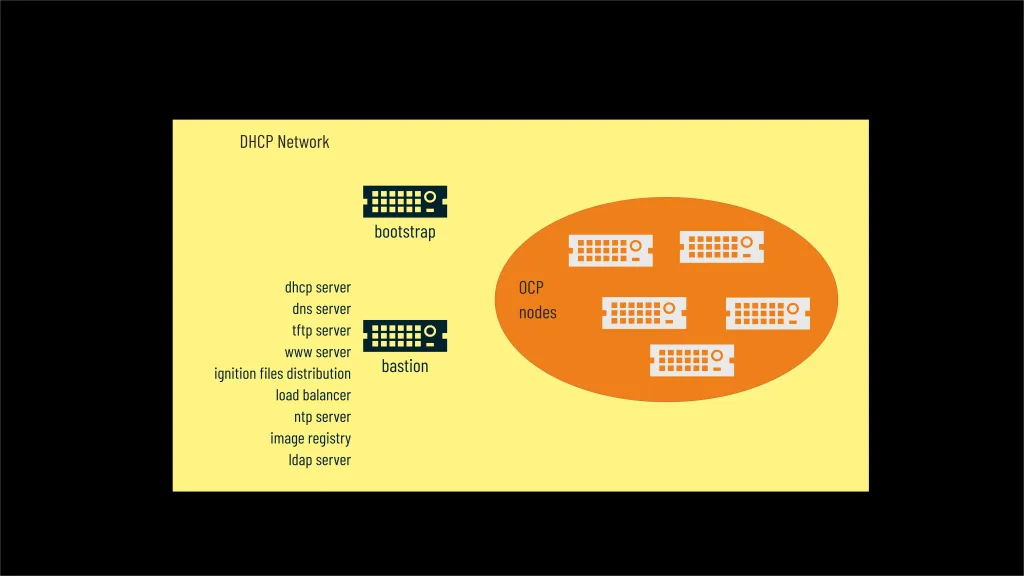

Depending on the installation method, OCP installation requires several additional services and an infrastructure to automate the procedure.

First of all, apart from the cluster nodes, an additional machine called bootstrap is required to control the installation process. Once the cluster is up and running, the bootstrap is stopped and can be removed from the infrastructure.

The automation relies on network-based installation of the CoreOS operating system on the cluster nodes and the bootstrap machine. To simplify the procedure, I assume that all machines are in the same subnet, isolated from other DHCP servers.

All network services run on a dedicated machine called bastion. It performs administrative and control functions for our test environment and also provides a whole range of services the environment needs, in particular:

- DHCP server – OCP cluster can use fixed or dynamic IP addressing, but in the case of CoreOS network installation the natural solution is to use DHCP, which will be configured on the bastion. DHCP will also be responsible for configuring machine booting using the PXE protocol. In my case I am using dnsmasq with built-in DHCP capability. Once again, all machines must be isolated from other DHCP servers so that they are properly configured by the local server located on the bastion.

- DNS server – for a test installation there is no need to register names on the corporate server. For the automation, I use dnsmasq on the bastion, configured to forward requests for names outside the cluster to a specified local or public name server (DNS forwarding).

- TFTP server – this simple file sharing protocol is required during the initial boot phase of nodes to share boot files. I’m using dnsmasq again for this purpose which has this feature built in.

- WWW server – the CoreOS installation process requires access to operating system images served over HTTP. I use the matchbox tool for this purpose, which also provides a mechanism for distributing the OCP cluster configuration files (ignition files) based on MAC address.

- Load balancer – in a distributed environment, access to the OCP cluster applications and APIs requires a load balancer that works at layer 4 (TCP) and checks the state of the backend services. For the test environment, I use HAProxy.

- NTP server – OCP internally uses UTC (Zulu time), and proper time synchronization between nodes is necessary. Additionally, to ensure that the certificates created on the machine that generates the cluster initialization files are valid, that machine must have a properly configured time zone, so that there is no difference in relative time between it and the cluster. Therefore, the automation lets you choose between a local NTP server on the bastion (chronyd) and an existing one. In an offline installation, an existing NTP server must be located on the corporate network.

- Image registry – a local one is necessary for air-gapped installations. In the automation process, it resides on the bastion and must be available at all times when the environment is running.

- LDAP server – Guardium Insights requires access to a directory service that will authenticate users of the solution. Optionally, the automation process can install and configure an OpenLDAP instance on bastion and connect it to our application environment.
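As an illustration of the load balancer role: an OpenShift user-provisioned installation balances four TCP ports, 6443 (Kubernetes API) and 22623 (machine config server) across the bootstrap and masters, and 80/443 (ingress) across the nodes running the router. The bash function below is a hypothetical sketch that renders matching HAProxy listen sections; gi-runner’s own template may differ, and the global and defaults sections are omitted.

```bash
# Hypothetical generator (not gi-runner's template) of HAProxy sections for OCP.
# usage: render_haproxy <bootstrap_ip> <master_ips> <ingress_ips>   (IP lists comma-separated)
render_haproxy() {
  local bootstrap=$1 s name port group ip i
  local -a masters ingress
  IFS=, read -ra masters <<< "$2"
  IFS=, read -ra ingress <<< "$3"

  # name:port:node group that serves it
  for s in api:6443:control machine-config:22623:control http:80:ingress https:443:ingress; do
    IFS=: read -r name port group <<< "$s"
    printf 'listen %s-%s\n    bind *:%s\n    mode tcp\n    balance roundrobin\n' "$name" "$port" "$port"
    i=0
    if [[ $group == control ]]; then
      # the bootstrap serves the API and machine configs until the masters take over
      printf '    server bootstrap %s:%s check\n' "$bootstrap" "$port"
      for ip in "${masters[@]}"; do
        printf '    server master%d %s:%s check\n' "$((i++))" "$ip" "$port"
      done
    else
      for ip in "${ingress[@]}"; do
        printf '    server ingress%d %s:%s check\n' "$((i++))" "$ip" "$port"
      done
    fi
    echo
  done
}

# 3 masters only: the routers run on the masters, so both lists are the same
# render_haproxy 192.168.100.115 192.168.100.111,192.168.100.112,192.168.100.113 \
#   192.168.100.111,192.168.100.112,192.168.100.113 >> /etc/haproxy/haproxy.cfg
```

Once the bootstrap phase has completed, the bootstrap entries should be removed from the API and machine config sections.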

I decided that the operating system on the bastion must be Fedora. Previously I used CentOS, but Red Hat’s decision to change its approach and turn CentOS into an upstream release (CentOS Stream) rules it out for installations in a client environment.

All cluster machines and the bootstrap must be configured to boot in BIOS mode: configure the machine firmware accordingly, or set this option for virtual machines. I plan to support UEFI in the future.

OpenShift supports virtual hardware up to version 13 (equivalent to ESXi 6.5). When installing on a VMware platform, ensure that all nodes are configured with this hardware version.

Bastion installation

As mentioned earlier, bastion is a key element of the infrastructure that allows us to manage the cluster, automate installation and provide services in a test environment where it is time-consuming to wait for access to them.

For the automation presented here, bastion requires a Fedora operating system with a minimum of 2 cores and 8 GB RAM. For offline installation, a large disk (minimum 250 GB) is required to store the installation files and portable image registry. For online or proxy installations, a 30 GB disk is sufficient.
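These minimums can be verified on the bastion before anything else is run. The hypothetical check_bastion function below (not part of gi-runner) reads the core count with nproc, total memory from /proc/meminfo and free space with df, so it needs no extra packages. Memory is rounded to the nearest GiB because the kernel reports slightly less than the configured RAM, and sizes are treated as GiB, which is slightly stricter than GB.

```bash
# Hypothetical pre-flight check (not part of gi-runner).
# usage: check_bastion <min_cores> <min_ram_gib> <min_free_disk_gib> [path]
check_bastion() {
  local min_cores=$1 min_ram=$2 min_disk=$3 path=${4:-/}
  local cores ram disk rc=0
  cores=$(nproc)
  # MemTotal is in KiB; round to the nearest GiB
  ram=$(awk '/^MemTotal:/ { printf "%d", $2 / 1048576 + 0.5 }' /proc/meminfo)
  # df -P: POSIX format; field 4 of the second line = available 1 KiB blocks
  disk=$(df -Pk "$path" | awk 'NR == 2 { printf "%d", $4 / 1048576 }')
  (( cores >= min_cores )) || { echo "CPU: $cores cores, need $min_cores" >&2; rc=1; }
  (( ram >= min_ram ))     || { echo "RAM: $ram GiB, need $min_ram GiB" >&2; rc=1; }
  (( disk >= min_disk ))   || { echo "Disk: $disk GiB free in $path, need $min_disk GiB" >&2; rc=1; }
  return "$rc"
}

# offline installation:         check_bastion 2 8 250 /root
# online or proxy installation: check_bastion 2 8 30 /root
```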

If the installation takes place without Internet access, do not install any additional software packages and do not update the system before installation – the procedure will perform these actions automatically.

The installation process of Fedora Server 34 is outlined here.

Proto-bastion for air-gapped installation

Installation in an air-gapped environment requires downloading, in advance, the installation tools and a number of images needed to install OpenShift, the operators and the applications that will be hosted on the cluster (Guardium Insights). This preparatory stage must be done outside the target environment, on a machine with Internet access. The resulting data archives must then be copied to the bastion in the isolated installation environment.

To automate this process, in addition to the elements strictly related to OpenShift, we must also make sure that the installation procedure has all the additional development tools (Ansible, Python, command-line tools) and services (dnsmasq, matchbox, haproxy, openldap).

Ensuring that software packages collected on one machine can be installed on another requires consistent system libraries, since most binaries are dynamically linked. Therefore, the workstation on which we build the archives is assumed to be identical to the target bastion, which is why I call it a proto-bastion.

In other words, the proto-bastion must be twinned with the target bastion within the installed operating system version, system kernel, and update level. This is why I emphasized earlier that the bastion should not be updated and the update procedure will be part of the cluster’s automatic installation process.

This means that the proto-bastion must also run Fedora, but it requires fewer resources: 1 core and 4 GB RAM are sufficient. Its disk requirements are similar to the bastion’s (at least 250 GB of storage), and it is installed in exactly the same way.
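Whether the two machines really are twins can be checked by comparing a fingerprint of each. The os_fingerprint function below is a hypothetical sketch, not part of gi-runner: it prints the distribution and version from /etc/os-release, the running kernel, the architecture and a SHA-256 hash of the sorted list of installed RPM packages. Run it on both machines and compare the outputs; any difference means that archives built on the proto-bastion may not install cleanly on the bastion.

```bash
# Hypothetical helper (not part of gi-runner): fingerprint of the OS state.
os_fingerprint() {
  local os="unknown" packages="n/a"
  if [[ -r /etc/os-release ]]; then
    # command substitution runs in a subshell, so sourced variables do not leak
    os=$(. /etc/os-release && echo "${ID:-unknown}-${VERSION_ID:-unknown}")
  fi
  if command -v rpm >/dev/null 2>&1; then
    # hash of the sorted list of installed packages (name-version-release.arch)
    packages=$(rpm -qa --qf '%{NAME}-%{VERSION}-%{RELEASE}.%{ARCH}\n' | sort | sha256sum | cut -d' ' -f1)
  fi
  echo "os=$os"
  echo "kernel=$(uname -r)"
  echo "arch=$(uname -m)"
  echo "packages=$packages"
}

# proto-bastion: os_fingerprint > fingerprint-proto.txt
# bastion:       os_fingerprint > fingerprint-bastion.txt
# with both files in one place:
#   diff fingerprint-proto.txt fingerprint-bastion.txt && echo "twins"
```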

gi-runner download

The collection of scripts and playbooks that automates the installation of OpenShift and Guardium Insights is available in the GitHub repository https://github.com/zbychfish/gi-runner.git

I recommend using the main branch, as it always contains the latest fully tested version.

For an online installation, install it on the bastion with the commands:

dnf -y install git

git clone https://github.com/zbychfish/gi-runner.git

If using a web proxy, the easiest way to download gi-runner is to use wget. For this you need to execute the commands:

wget -e use_proxy=yes -e https_proxy=<proxy_ip>:<proxy_port> https://github.com/zbychfish/gi-runner/archive/refs/heads/main.zip

unzip main.zip

where <proxy_ip> and <proxy_port> are the IP address and port of your proxy server.

For offline installation, copy to bastion from proto-bastion the gi-runner.zip archive located in the air-gap directory and unzip it with the command:

unzip gi-runner.zip

The automation process assumes that the root account is used on the proto-bastion and bastion machines for the installation. In the examples, the gi-runner archive is located in the user’s home directory (/root), but another location on the file system may be used.

Here is a short video showing how to download gi-runner in all situations (online, proxy and offline).

Air-gap installation archives preparation

This chapter focuses on creating installation archives for offline installations. You can skip it if you have Internet access.

All scripts discussed in this section are located in the prepare-scripts subdirectory of the gi-runner project you just downloaded. Depending on the purpose of the installation, you will need to run several of them.

prepare-air-gap-os-files.sh

Run the prepare-air-gap-os-files.sh script on the proto-bastion, which must be an exact copy of the operating system on the target bastion machine.

prepare-scripts/prepare-air-gap-os-files.sh

It performs the following actions:

- downloads operating system updates

- updates the proto-bastion

- downloads additional software packages required for the automation process (e.g. ansible, haproxy, openldap, dnsmasq)

- downloads additional Python modules

- downloads additional Ansible Galaxy modules

- downloads the gi-runner archive

- packages the collected files into archives and places them in the air-gap directory

Two files appear in the air-gap directory:

- gi-runner.zip

- os-Fedora_release_<release>-<date>.tar

where the date represents the day the archive was created and also indicates the date of the system update.

If the script ends with an error message, it must be rerun after the operating system has been restored to its initial state. I therefore recommend creating a snapshot of the proto-bastion before running this script.

The way the script works is shown here.

prepare-air-gap-coreos.sh

prepare-scripts/prepare-air-gap-coreos.sh

The prepare-air-gap-coreos.sh script prepares an archive to install an OpenShift cluster; specifically, it:

- installs a temporary image registry on the proto-bastion

- downloads the files to boot and install CoreOS on the cluster nodes

- downloads the installation tools (openshift-client, openshift-install, opm, matchbox)

- downloads OpenShift container images from quay.io

The script is interactive and requires three pieces of information:

- version of OpenShift you want to install (e.g. 4.6.40, 4.7.3)

- Red Hat pull secret – a set of credentials that allows access to the Red Hat repositories (https://console.redhat.com/openshift/install/pull-secret).

- email address associated with your Red Hat account

If the process fails (usually due to a temporary lack of downloadable resources), just run the script again.

An example use of the script is shown here.

As a result, the coreos-registry-<version>.tar archive is created in the air-gap directory, where <version> denotes the OpenShift version for which the data was prepared.

prepare-air-gap-olm.sh

prepare-scripts/prepare-air-gap-olm.sh

Running an application in a Kubernetes environment involves controlling how its services run (their resources, instances and communication). The preferred mechanism in OpenShift is to use operators for this purpose, and their sheer number led to a central distribution mechanism, the Operator Lifecycle Manager (OLM).

Currently, operators are divided into four groups: Red Hat, Certified, Marketplace and Community operators.

If we wanted to copy all of them to our offline installation, we would need several terabytes. Therefore, before building an OLM archive, we need to decide which operators we will need.

The default settings download:

- Red Hat operators: local-storage-operator, ocs-operator

- Certified operators: portworx-certified

- Marketplace operators: mongodb-enterprise-rhmp

- Community operators: portworx-essentials

To use OCS as the OpenShift storage, the local-storage-operator and ocs-operator must be mirrored in this process.

To override the default operator lists, you can define four environment variables just before running the script:

export REDHAT_OPERATORS_OVERRIDE="<operator1>,<operator2>,...,<operatorN>"

export CERTIFIED_OPERATORS_OVERRIDE="<operator1>,<operator2>,...,<operatorN>"

export MARKETPLACE_OPERATORS_OVERRIDE="<operator1>,<operator2>,...,<operatorN>"

export COMMUNITY_OPERATORS_OVERRIDE="<operator1>,<operator2>,...,<operatorN>"

where an operator list cannot be empty and operators are separated by commas without spaces.
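Because a malformed list is easy to miss, the variables can be checked before the script runs. The sketch below is hypothetical (gi-runner may validate them differently) and assumes that operator package names contain only lowercase letters, digits, dots and hyphens. It rejects empty lists, spaces and empty items, and only checks the variables that are actually set.

```bash
# Hypothetical validation (not part of gi-runner) of the *_OPERATORS_OVERRIDE variables.
# Assumes package names use only lowercase letters, digits, dots and hyphens.
valid_operator_list() {
  local re='^[a-z0-9][a-z0-9.-]*(,[a-z0-9][a-z0-9.-]*)*$'
  [[ $1 =~ $re ]]
}

check_operator_overrides() {
  local var rc=0
  for var in REDHAT_OPERATORS_OVERRIDE CERTIFIED_OPERATORS_OVERRIDE \
             MARKETPLACE_OPERATORS_OVERRIDE COMMUNITY_OPERATORS_OVERRIDE; do
    # only variables that are set are checked; ${!var} reads the variable named by $var
    if [[ -v $var ]] && ! valid_operator_list "${!var}"; then
      echo "Invalid $var: '${!var}'" >&2
      rc=1
    fi
  done
  return "$rc"
}

export REDHAT_OPERATORS_OVERRIDE="local-storage-operator,ocs-operator"
check_operator_overrides && echo "overrides OK"
```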

The script is interactive and requires information about:

- version of OpenShift you want to install (e.g. 4.6.40, 4.7.3)

- Red Hat pull secret – a set of credentials that allows access to the quay.io repository (https://console.redhat.com/openshift/install/pull-secret).

- Red Hat account name

- Red Hat account password

Marketplace hub operators are available only when the Red Hat account has the correct permissions. The script does not check these permissions, so errors are ignored when mirroring them.

The size of the archive is unpredictable due to the list of operators and can vary between a few and hundreds of gigabytes.

An example of script use can be reviewed here.

prepare-air-gap-rook.sh

prepare-scripts/prepare-air-gap-rook.sh

The next script handles rook-ceph, the second storage option supported by the gi-runner automation.

This script requires no input and copies images for two rook-ceph versions: the first, a very old one, still effectively supports deployment on a single node, while the second is used for the other cluster architectures.

The size of the archive is about 1.5 GB. An example of rook script use can be reviewed here.

prepare-air-gap-ics.sh

prepare-scripts/prepare-air-gap-ics.sh

Mirroring IBM Common Services (ICS) images is not required to install OpenShift; it is needed only if you install software that uses these services. Software that depends on ICS very often references ICS directly in its CASE files, and downloading the ICS images is then part of preparing the archive for that solution. This is the case for Guardium Insights, for example. Therefore, this script only makes sense when you need to install a specific version of ICS independently.

The script prepares an archive to install ICS; specifically, it:

- installs a temporary image registry on the proto-bastion

- downloads the installation and mirroring tools (cloudctl, openshift-install, skopeo)

- downloads ICS container images from repositories

The script is interactive and requires input:

- Red Hat account name

- Red Hat account password

- selection of the ICS version (a list of versions currently supported by gi-runner is displayed)

The size of the archive depends on the ICS version but it will not be smaller than 15 GB.

How to use the script can be found here.

prepare-air-gap-gi.sh

prepare-scripts/prepare-air-gap-gi.sh

Next, we create the Guardium Insights image archive. This process also downloads all additional components required during installation, including:

- IBM Common Services

- Redis operator

- DB2 operator

This archive must be prepared only for Guardium Insights installation. The standard OCP cluster deployment does not require it.

The images are located in the cp.icr.io repository, and access to some of them requires authentication, so you need to enter your IBM Cloud container key (https://myibm.ibm.com/products-services/containerlibrary).

The process of downloading images may take up to several hours depending on the quality of your Internet connection.

Long-running connections sometimes suffer from broken sessions, which cause errors in the mirroring operation. That’s why the script accepts a ‘repeat’ parameter, which continues the download process on restart without having to reinitialize it:

prepare-scripts/prepare-air-gap-gi.sh repeat
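Instead of restarting by hand, the rerun can be wrapped in a loop. The run_with_repeat function below is a hypothetical sketch, not part of gi-runner: it runs the script once and, on failure, reruns it with the repeat argument, up to a given number of attempts. Since the script is interactive, each rerun may prompt for input again.

```bash
# Hypothetical wrapper (not part of gi-runner).
# usage: run_with_repeat <max_attempts> <script> [args...]
run_with_repeat() {
  local max=$1 attempt=1
  shift
  # first attempt: normal run
  "$@" && return 0
  while (( attempt < max )); do
    attempt=$(( attempt + 1 ))
    echo "Attempt $attempt/$max: continuing with 'repeat'" >&2
    # later attempts: resume the interrupted mirroring
    "$@" repeat && return 0
  done
  return 1
}

# run_with_repeat 3 prepare-scripts/prepare-air-gap-gi.sh
```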

The script is interactive and requires input:

- IBM Cloud container key

- selection of the GI version (a list of versions currently supported by gi-runner is displayed)

The archive exceeds 60 GB, and about twice that amount of free disk space must be available to create it.

Example of script use is here.

Archives required for an air-gapped installation:

| prepare script | archive name | OCP | ICS | GI |
|---|---|---|---|---|
| prepare-air-gap-os-files.sh | os-Fedora_release_<release>-<date>.tar | X | X | X |
| prepare-air-gap-coreos.sh | coreos-registry-<OCP_version>.tar | X | X | X |
| prepare-air-gap-olm.sh | olm-registry-<OCP_major_version>-<date>.tar | X | X | X |
| prepare-air-gap-rook.sh | rook-registry-<date>.tar | X | X | X |
| prepare-air-gap-ics.sh | ics_registry-<ICS_version>.tar | | X | |
| prepare-air-gap-gi.sh | gi_registry-<GI_version>.tar | | | X |
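Because these archives are large and are copied between machines, it is worth verifying that they arrive intact. A minimal sketch using sha256sum from GNU coreutils: create a manifest in the air-gap directory on the proto-bastion, copy it together with the archives, and verify it on the bastion. The function names and the SHA256SUMS file name are hypothetical.

```bash
# Hypothetical helpers (not part of gi-runner), using GNU coreutils sha256sum.

# usage: make_manifest <dir>   (on the proto-bastion, e.g. make_manifest air-gap)
make_manifest() {
  # subshell, so the caller's working directory does not change
  ( cd "$1" && sha256sum -- *.tar > SHA256SUMS )
}

# usage: verify_manifest <dir> (on the bastion, after copying *.tar and SHA256SUMS)
verify_manifest() {
  # --quiet: report only failing files; non-zero exit status on any mismatch
  ( cd "$1" && sha256sum --quiet -c SHA256SUMS )
}
```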

Cluster nodes setup

With the installation archives prepared for the offline environment, we can take care of the infrastructure. In the subnet dedicated to the OCP cluster, a Fedora VM acting as the bastion is already installed; at the operating system level it is identical to the proto-bastion used in the previous steps.

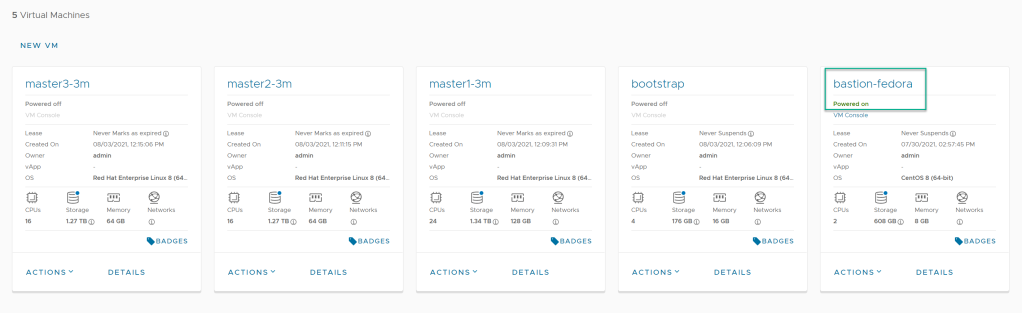

In addition, four machine templates have been built: the bootstrap and three cluster nodes.

The bootstrap is temporary and will be removed after OpenShift is installed, while the cluster nodes were sized for the planned infrastructure.

Having only three nodes means that the workers run on the same machines as the Control Plane.

The amount of vCPU and RAM can be adjusted for each node independently, taking into account the target system that will run on the cluster. In my case it is Guardium Insights, which is why one of the nodes is much more powerful: I am going to place on it the database engine that collects data access events.

Since there will only be 3 nodes in the cluster, each node has an additional disk (500 GB) that will be configured as virtual disk space for OCP-controlled applications. The disks must be the same size.

The boot disks, including the bootstrap’s, must be 70-120 GB in size.

To assign IP addresses properly and determine each node’s function, we need to know the MAC address of every node before starting the installation. If a network interface does not have a MAC address assigned yet, it must be set manually.
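A typo in a MAC address, or lists of different lengths, would leave a node without the expected DHCP configuration, so these values are worth checking up front. The validate_nodes function below is a hypothetical sketch, not part of gi-runner, that checks one group of comma-separated values, such as GI_NODE_IP, GI_NODE_MAC_ADDRESS and GI_NODE_NAME from the table that follows: the three lists must have the same number of entries, every MAC must consist of six colon-separated hexadecimal octets, and no MAC may repeat (compared case-insensitively).

```bash
# Hypothetical validation (not part of gi-runner) of one group of node definitions.
# usage: validate_nodes <ip_list> <mac_list> <name_list>   (each comma-separated)
validate_nodes() {
  local -a ips macs names
  local mac re='^([0-9a-fA-F]{2}:){5}[0-9a-fA-F]{2}$'
  IFS=, read -ra ips <<< "$1"
  IFS=, read -ra macs <<< "$2"
  IFS=, read -ra names <<< "$3"
  # the lists are matched by position, so they must have the same length
  if (( ${#ips[@]} != ${#macs[@]} || ${#ips[@]} != ${#names[@]} )); then
    echo "ERROR: ${#ips[@]} IPs, ${#macs[@]} MACs, ${#names[@]} names" >&2
    return 1
  fi
  for mac in "${macs[@]}"; do
    [[ $mac =~ $re ]] || { echo "ERROR: invalid MAC address '$mac'" >&2; return 1; }
  done
  # duplicates are detected case-insensitively
  if [[ -n $(printf '%s\n' "${macs[@]}" | tr 'A-F' 'a-f' | sort | uniq -d) ]]; then
    echo "ERROR: duplicate MAC address" >&2
    return 1
  fi
}

# validate_nodes "$GI_NODE_IP" "$GI_NODE_MAC_ADDRESS" "$GI_NODE_NAME"
```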

We can now gather all the infrastructure information needed for the installation:

| Description | Environment variable | Value example |
|---|---|---|
| OCP subnet default gateway | GI_GATEWAY | 192.168.100.254 |
| DNS forwarder able to resolve machine names outside the cluster scope. If no forwarder is available, point to the bastion itself; this avoids very long Ansible fact gathering. | GI_DNS_FORWARDER | 192.168.100.254 |
| Bastion IP address; if the bastion has several network interfaces, use the IP assigned to the NIC connected to the OCP subnet | GI_BASTION_IP | 192.168.100.100 |
| NTP server: IP address of the internal NTP server. The installation procedure can deploy one on the bastion, in which case this variable is automatically set to the bastion IP | GI_NTP_SRV | 192.168.30.12 |
| Bootstrap IP address: the address that will be dynamically assigned to the bootstrap node | GI_BOOTSTRAP_IP | 192.168.100.115 |
| Master node IPs: comma-separated list of the Control Plane node addresses | GI_NODE_IP | 192.168.100.111,192.168.100.112,192.168.100.113 |
| Bootstrap MAC address: hardware address of the bootstrap NIC | GI_BOOTSTRAP_MAC_ADDRESS | 00:50:56:01:c8:ce |
| Master node MAC addresses: comma-separated list of the hardware addresses of the Control Plane nodes, in the same order as the IP addresses | GI_NODE_MAC_ADDRESS | 00:50:56:01:c8:cf,00:50:56:01:c8:d0,00:50:56:01:c8:d1 |
| Local OCP cluster name of the bootstrap node | GI_BOOTSTRAP_NAME | boot |
| Comma-separated list of the local OCP cluster names of the master nodes, in the same order as the IP addresses | GI_NODE_NAME | m1,m2,m3 |
| Local OCP cluster name of the bastion | GI_BASTION_NAME | bastion |
| Start of the IP address range served by the DHCP server on the bastion to the OCP nodes; the range should cover all addresses given in GI_BOOTSTRAP_IP and GI_NODE_IP | GI_DHCP_RANGE_START | 192.168.100.111 |
| OS name of the cluster node NIC; in most cases it will be ens192 or ens33 | GI_NETWORK_INTERFACE | ens192 |
| Device name of the boot disk on the cluster nodes; in most cases it will be sda or nvme0n1 | GI_BOOT_DEVICE | sda |
| Device name of the additional disk for cluster storage; in most cases it will be sdb or nvme1n1 | GI_STORAGE_DEVICE | sdb |
| Size (in GB) of the additional storage disk | GI_STORAGE_DEVICE_SIZE | 500 |
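To show how these values could feed the bastion services, the sketch below renders a dnsmasq configuration fragment from them: a DHCP reservation and a DNS record (A and PTR, via host-record) for each master and the bootstrap, the gateway, DNS and NTP options handed out over DHCP, forwarding of other names to GI_DNS_FORWARDER, and the api, api-int and *.apps names pointing at the bastion, where HAProxy runs. This is a hypothetical function, not gi-runner’s template. It assumes that GI_DOMAIN is the full cluster domain, uses dnsmasq’s static DHCP mode so that only reserved hosts receive addresses, and leaves out the TFTP/PXE boot options; the output path in the usage comment is also an assumption.

```bash
# Hypothetical renderer (not gi-runner's template) of a dnsmasq fragment.
# Reads the GI_* variables from the table above; assumes GI_DOMAIN is the full cluster domain.
render_dnsmasq() {
  local -a ips macs names
  local i
  IFS=, read -ra ips <<< "$GI_NODE_IP"
  IFS=, read -ra macs <<< "$GI_NODE_MAC_ADDRESS"
  IFS=, read -ra names <<< "$GI_NODE_NAME"
  # the bootstrap is served exactly like a cluster node
  ips+=("$GI_BOOTSTRAP_IP")
  macs+=("$GI_BOOTSTRAP_MAC_ADDRESS")
  names+=("$GI_BOOTSTRAP_NAME")

  echo "server=$GI_DNS_FORWARDER"                 # forward out-of-cluster names
  echo "dhcp-range=$GI_DHCP_RANGE_START,static"   # serve only reserved hosts
  echo "dhcp-option=option:router,$GI_GATEWAY"
  echo "dhcp-option=option:dns-server,$GI_BASTION_IP"
  echo "dhcp-option=option:ntp-server,$GI_NTP_SRV"
  # API endpoints and the *.apps wildcard resolve to the load balancer on the bastion
  echo "host-record=api.$GI_DOMAIN,$GI_BASTION_IP"
  echo "host-record=api-int.$GI_DOMAIN,$GI_BASTION_IP"
  echo "address=/apps.$GI_DOMAIN/$GI_BASTION_IP"
  # fixed lease plus forward and reverse DNS record for every machine
  for i in "${!ips[@]}"; do
    echo "dhcp-host=${macs[i]},${ips[i]},${names[i]}"
    echo "host-record=${names[i]}.$GI_DOMAIN,${ips[i]}"
  done
}

# with the GI_* variables set in the shell (assumed output path):
#   render_dnsmasq > /etc/dnsmasq.d/ocp.conf
```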

init.sh – gathering cluster info

With the prepared archives moved to the bastion, we can start the configuration phase of the OCP cluster installation.

The archives can be located anywhere (on a mounted disk or shared over NFS), although by default the script expects them in the $gi_runner_home/downloads directory.
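Before starting init.sh, it can help to confirm that the archives required for the planned scope are actually present in that directory. The check_archives function below is a hypothetical sketch, not part of gi-runner, that follows the table of required archives: every scope needs the OS, CoreOS, OLM and rook archives, ICS adds the ICS archive, and GI adds the Guardium Insights archive. It matches file names with wildcards, so versions and dates do not need to be known in advance.

```bash
# Hypothetical check (not part of gi-runner) that the archives for a scope are present.
# usage: check_archives <dir> <OCP|ICS|GI>
check_archives() {
  local dir=$1 scope=$2 p missing=0
  # archives required for every scope
  local -a patterns=("os-Fedora_release_*.tar" "coreos-registry-*.tar"
                     "olm-registry-*.tar" "rook-registry-*.tar")
  case "$scope" in
    OCP) ;;
    ICS) patterns+=("ics_registry-*.tar") ;;
    GI)  patterns+=("gi_registry-*.tar") ;;
    *)   echo "unknown scope: $scope" >&2; return 1 ;;
  esac
  for p in "${patterns[@]}"; do
    # compgen -G succeeds only if the wildcard matches at least one file
    if ! compgen -G "$dir/$p" > /dev/null; then
      echo "MISSING: $p" >&2
      missing=1
    fi
  done
  return "$missing"
}

# check_archives "$gi_runner_home/downloads" GI
```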

The installation must be performed using the root account on the bastion. After unpacking the gi-runner.zip archive, you will find in the gi-runner-main directory the init.sh script, which gathers information about the installation architecture and, for an offline installation, additionally:

- installs operating system updates

- installs supporting software packages

- installs additional modules for Ansible and Python

It also configures the system time and access to the NTP service. init.sh is interactive and produces a batch file defining a set of environment variables that control the entire OCP, ICS and GI installation process. Start it simply by executing:

./init.sh

In addition to the variables listed in the table above for an air-gapped installation, the following are also defined:

| Description | Environment variable | Value example |
|---|---|---|
| OCP release, expressed either as a full version (e.g. 4.6.41, 4.7.13) or as the latest release of a given stream (4.6 or 4.7) | GI_OCP_RELEASE | 4.6.latest |
| OCP internet access; possible values: A (air-gapped), P (proxy), D (direct) | GI_INTERNET_ACCESS | A |
| OCP cluster domain; it should be a unique name in FQDN format. For a test installation there is no reason to use a public domain | GI_DOMAIN | ocp4.guardium.notes |
| One-node installation architecture, which should be used for real cases | GI_ONENODE | Y |
| Three-master installation; if the variable is set to N, OCP is deployed with dedicated workers | GI_MASTER_ONLY | Y |
| Cluster storage type, where O means OCS and R means rook-ceph | GI_STORAGE_TYPE | O |
| For an OCP cluster with workers, a comma-separated list of worker IP addresses. OCP requires a minimum of 2 workers | GI_WORKER_IP | 192.168.100.121,192.168.100.122 |
| Comma-separated list of the hardware MAC addresses of the worker NICs; quantity and order must correspond to the worker IP list | GI_WORKER_MAC_ADDRESS | 00:50:56:01:c8:d6,00:50:56:01:c8:d7 |
| Comma-separated list of worker names | GI_WORKER_NAME | w1,w2 |
| With OCS-based cluster storage, separate nodes can be dedicated to disk management only; in this case this variable must be set to Y | GI_OCS_TAINTED | Y |
| To isolate the OCS instance from the rest of the system functions, specify the IP addresses of the three nodes responsible for storage management. These nodes must be attached to an additional disk defined by the GI_STORAGE_DEVICE variable. The value must be a comma-separated list | GI_OCS_IP | 192.168.100.131,192.168.100.132,192.168.100.133 |
| Comma-separated list of the OCS node NIC hardware addresses, in the order corresponding to the IP addresses | GI_OCS_MAC_ADDRESS | 00:50:56:01:c8:d9,00:50:56:01:c8:da,00:50:56:01:c8:db |
| Comma-separated list of OCS node names, in the order corresponding to their IP addresses | GI_OCS_NAME | ocs1,ocs2,ocs3 |
| Pods communicate using their own network addressing, and this variable defines the subnet used for it. The default is the 10.128.x.x address space; unless it overlaps with addresses used in the infrastructure, there is no need to change it | GI_OCP_CIDR | 10.128.0.0 |
| Size (network mask) of the address block carved out of GI_OCP_CIDR and assigned to each node for its pods | GI_OCP_CIDR_MASK | 23 |
| IP address and port of the proxy server when GI_INTERNET_ACCESS is set to P; otherwise the value is NO_PROXY | GI_PROXY_URL | NO_PROXY |
| Name of the OCP cluster administrator added during the procedure using htpasswd authentication | GI_OCADMIN | ocp_admin |
| Password of the administrator defined by GI_OCADMIN | GI_OCADMIN_PWD | |
| Each execution of init.sh generates a new SSH key, stored in /root/.ssh/cluster_id_rsa, which provides access to the nodes using the core account | GI_SSH_KEY | <ssh key body> |
| For non-air-gapped installations, container images are pulled from Red Hat repositories; this variable provides the authentication information for them (Red Hat pull secret) | GI_RHN_SECRET | <Red Hat pull secret> |
| The configuration of an existing cluster is stored in a config file that allows the use of administration tools such as oc and kubectl. This variable sets a static path to the configuration file created by the gi-runner OCP installation playbooks | KUBECONFIG | /root/<gi_runner_home_dir>/ocp/auth/kubeconfig |
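Several of the variables above are parallel comma-separated lists whose quantity and order must match (IP, MAC and name lists for workers and for OCS nodes). The following is a minimal bash sketch, not part of gi-runner, of how this could be checked after loading variables.sh and before booting any node; the function names `count_items` and `check_node_lists` are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch (not part of gi-runner): check that parallel comma-separated lists
# from variables.sh have matching lengths, as the variable table requires.

# Print the number of entries in a comma-separated list (empty string -> 0).
count_items() {
  local -a items
  if [ -z "$1" ]; then
    echo 0
    return 0
  fi
  IFS=',' read -r -a items <<< "$1"
  echo "${#items[@]}"
}

# Usage: check_node_lists <label> <ip_list> <mac_list> <name_list>
# Returns non-zero if the three lists do not have the same number of entries.
check_node_lists() {
  local label="$1" ips macs names
  ips=$(count_items "$2")
  macs=$(count_items "$3")
  names=$(count_items "$4")
  if [ "$ips" -ne "$macs" ] || [ "$ips" -ne "$names" ]; then
    echo "$label: $ips IPs, $macs MACs, $names names (counts must match)" >&2
    return 1
  fi
  echo "$label: $ips nodes OK"
}
```

For example, `check_node_lists workers "$GI_WORKER_IP" "$GI_WORKER_MAC_ADDRESS" "$GI_WORKER_NAME"` prints `workers: 2 nodes OK` for the example values above; the same call works for the GI_OCS_* variables. The check only compares counts, so the order of the entries still has to be verified by eye.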

Additional variables associated with the installation of IBM Common Services, Guardium Insights, and OpenLDAP will be discussed in the appropriate sections below.

The result of the init.sh script is a variables.sh file that will appear in the gi-runner home directory. It must be loaded into the current shell before running each playbook with the command:

. <gi_runner_home_dir>/variables.sh
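Because variables.sh has to be loaded into every new shell, a forgotten `. variables.sh` (for example after the bastion reboot mentioned below) is easy to miss. A small guard can catch it before a playbook starts. This is a hypothetical helper, not part of gi-runner, and which variables to check is your choice.

```bash
#!/usr/bin/env bash
# Sketch (not part of gi-runner): refuse to continue if the variables that a
# playbook depends on are not set in the current shell.
# Usage: require_vars VAR1 VAR2 ...
require_vars() {
  local name missing=0
  for name in "$@"; do
    # ${!name} is bash indirect expansion: the value of the variable called $name.
    if [ -z "${!name:-}" ]; then
      echo "missing: $name (was '. <gi_runner_home_dir>/variables.sh' run in this shell?)" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For example: `require_vars GI_DOMAIN GI_INTERNET_ACCESS KUBECONFIG && ansible-playbook playbooks/01-finalize-bastion-setup.yaml`. The playbook only starts if every listed variable is non-empty.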

An example of using the init.sh script for an offline installation and a cluster based on only three master nodes can be found here.

Playbook 1 – completing the bastion configuration process

Our bastion is now up and ready to run the playbooks that automate the installation of OpenShift.

The first one finalizes the operating system update operation and, in the case of an air-gap installation, unpacks the image archives.

If the update installed a new kernel, a message about the need to restart the bastion will appear. In this case, log in again after the reboot, go to your gi-runner home directory, and reload variables.sh.

Start the playbook with the command:

ansible-playbook playbooks/01-finalize-bastion-setup.yaml

and the effect of the operation can be seen here.

Playbook 2 – installation services setup

ansible-playbook playbooks/02-setup-bastion-for-ocp-installation.yaml

We proceed to install and configure the bastion services responsible for automatically installing CoreOS on the nodes and preparing the bootstrap machine to run the OpenShift instance.

As part of the playbook operation on bastion we will deploy:

- matchbox – responsible for the boot phase using PXE/iPXE, and a simple HTTP server that makes CoreOS images available

- dnsmasq – providing DHCP, DNS and TFTP services

- haproxy – load balancer for internal and external traffic

- image registry – local image repository based on the previously unpacked archives

After a while we should be ready to run the cluster installation and an example of how the playbook works can be found here.
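To illustrate how dnsmasq ties each node to its expected address, the sketch below turns the worker lists from variables.sh into dnsmasq `dhcp-host` reservation lines (MAC, IP, hostname). The function name `dhcp_host_lines` is hypothetical, and the output only illustrates the mechanism; the configuration actually written by playbook 2 may look different.

```bash
#!/usr/bin/env bash
# Sketch (not part of gi-runner): print dnsmasq "dhcp-host" reservations
# (MAC,IP,hostname) from the parallel lists defined by init.sh.
# Usage: dhcp_host_lines <ip_list> <mac_list> <name_list>
dhcp_host_lines() {
  local -a ips macs names
  local i
  IFS=',' read -r -a ips <<< "$1"
  IFS=',' read -r -a macs <<< "$2"
  IFS=',' read -r -a names <<< "$3"
  for i in "${!ips[@]}"; do
    # One static reservation per node: the NIC with this MAC gets this IP and name.
    echo "dhcp-host=${macs[$i]},${ips[$i]},${names[$i]}"
  done
}
```

With the example worker values, `dhcp_host_lines "$GI_WORKER_IP" "$GI_WORKER_MAC_ADDRESS" "$GI_WORKER_NAME"` prints `dhcp-host=00:50:56:01:c8:d6,192.168.100.121,w1` followed by the line for w2. A node whose MAC matches no reservation does not get its expected address, which is why a mistyped MAC shows up during the boot phase.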

Cluster boot phase

Bastion is now ready to install OpenShift. To start the process, we need to boot the bootstrap machine and the cluster nodes.

A virtual machine on a hypervisor, or a physical machine without an operating system, tries by default to obtain an IP address from a DHCP service. The DHCP service on the bastion tells the client that the operating system will be installed over the network using PXE/iPXE and provides the location of the boot files.

The CoreOS installer is then loaded into memory and downloads the remaining configuration data and the OpenShift cluster initialization files (ignition files).

After a while all machines reboot with the operating system installed and bootstrap starts the Control Plane configuration phase.

Problems with proper installation of CoreOS are usually related to the following situations:

- The nodes are on a non-isolated DHCP network and another server is responding to the request. This manifests itself by assigning different IP addresses than those defined during configuration.

- PXE/iPXE requests are broadcast on the subnet and another server, with higher priority, responds to them. This shows up as an attempt to install a different operating system.

- The server does not receive an IP address. Most likely a wrong MAC address was entered during the init.sh phase.

- The boot procedure is aborted or freezes at one of the stages. This could be a network bottleneck; in this case, boot the machines one by one instead of all at once.
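Since a mistyped MAC address is a common reason for a node not receiving an IP address, it can be worth validating the MAC lists before booting. The hypothetical `check_mac_list` helper below (not part of gi-runner) assumes the colon-separated notation used in the examples, and also reports duplicates.

```bash
#!/usr/bin/env bash
# Sketch (not part of gi-runner): validate a comma-separated MAC address list
# such as GI_WORKER_MAC_ADDRESS or GI_OCS_MAC_ADDRESS.
check_mac_list() {
  local -a macs
  local mac dups rc=0
  local re='^([0-9A-Fa-f]{2}:){5}[0-9A-Fa-f]{2}$'   # e.g. 00:50:56:01:c8:d6
  IFS=',' read -r -a macs <<< "$1"
  for mac in "${macs[@]}"; do
    if ! [[ $mac =~ $re ]]; then
      echo "invalid MAC: '$mac'" >&2
      rc=1
    fi
  done
  # The same MAC listed twice would map two nodes to one NIC; report it.
  dups=$(printf '%s\n' "${macs[@]}" | tr 'A-F' 'a-f' | sort | uniq -d)
  if [ -n "$dups" ]; then
    echo "duplicate MAC(s): $dups" >&2
    rc=1
  fi
  return "$rc"
}
```

For example, `check_mac_list "$GI_WORKER_MAC_ADDRESS" && check_mac_list "$GI_OCS_MAC_ADDRESS"`. It only checks the format; whether each address really belongs to the intended VM still has to be confirmed in the hypervisor.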

Sometimes, especially in ESX environments, the first boot of a machine shows an improperly initialized network interface. In that case, shut down the machine and start it again.

The bootstrap phase along with the expected state of the nodes can be watched here.

Playbook 3 – OpenShift installation finalization

The OpenShift installation process has begun. Bootstrap will configure the master nodes on its own, but there are still a few tasks that need to be automated:

- accepting certificates for nodes outside the Control Plane

- adding the administrative account specified by the GI_OCADMIN variable

- in an offline environment, changing the image content policy and consequently restarting all nodes in the cluster

- configuring storage for the cluster

- configuring the cluster image registry

The entire process of completing the installation is handled by command:

ansible-playbook playbooks/03-finish_ocp_install.yaml

An example of execution is shown here.
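Playbook 3 approves the node certificates automatically. If a node outside the Control Plane still does not show up in `oc get nodes`, or stays NotReady, the same step can be done by hand. The sketch below wraps the `oc get csr` go-template and `oc adm certificate approve` commands from the OpenShift documentation in a hypothetical `approve_pending_csrs` function; it assumes `oc` is installed on the bastion and KUBECONFIG from variables.sh is loaded.

```bash
#!/usr/bin/env bash
# Sketch: approve all pending certificate signing requests (CSRs).
# Assumes 'oc' is on PATH and KUBECONFIG points at the cluster admin kubeconfig.
approve_pending_csrs() {
  local csr
  # A CSR without a .status is still pending (neither approved nor denied).
  oc get csr -o go-template='{{range .items}}{{if not .status}}{{.metadata.name}}{{"\n"}}{{end}}{{end}}' |
    while read -r csr; do
      [ -n "$csr" ] || continue
      oc adm certificate approve "$csr"
    done
}
```

According to the OpenShift documentation, the kubelet serving CSRs appear only after the client CSRs have been approved, so the function may need to run twice before all nodes become Ready.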

Once complete, we will be able to log into a fully functional OCP cluster.

To log in to the cluster, the client machine must resolve two names correctly:

<bastion_ip> console-openshift-console.apps.<cluster_domain> oauth-openshift.apps.<cluster_domain>

This can be done in several ways:

- by adding them to the corporate DNS server

- by switching client station to DNS running on bastion

- by adding the proper entries to the local hosts file (e.g. /etc/hosts)
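For the hosts-file option, a single line per bastion is enough, because all route names resolve to the bastion IP where haproxy runs. This hypothetical helper (not part of gi-runner) prints that line and accepts extra route prefixes, such as the cp-console and insights names needed later for ICS and GI.

```bash
#!/usr/bin/env bash
# Sketch (not part of gi-runner): print a hosts-file line that maps the OCP
# console/OAuth routes, plus optional extra route prefixes, to the bastion IP.
# Usage: hosts_entry <bastion_ip> <cluster_domain> [extra_route_prefix ...]
hosts_entry() {
  local ip="$1" domain="$2" prefix line
  shift 2
  line="$ip"
  for prefix in console-openshift-console oauth-openshift "$@"; do
    line+=" ${prefix}.apps.${domain}"
  done
  echo "$line"
}
```

For example, with a hypothetical bastion address 192.168.100.10, `hosts_entry 192.168.100.10 ocp4.guardium.notes cp-console insights` prints one line that can be appended to /etc/hosts on the client station.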

Now we are ready to install applications on top of OCP.

Playbook 4 – ICS installation

Guardium Insights uses IBM Common Services (ICS), which must be installed beforehand; all Cloud Pak solutions are also based on ICS. Hence, gi-runner also allows you to automate this process.

When installing in an offline environment, the container images needed to install ICS (including the version selection) are included in the GI environment definition – in a so-called CASE file.

Installing a version of ICS other than the GI default would require a separate process: downloading ICS using the prepare-air-gap-ics.sh script, installing ICS from it, and only then switching to the Guardium Insights installation. gi-runner does not support this scenario, since newer versions of GI may provide a mechanism to select the ICS version during offline installation.

Automating the ICS installation process requires additional environment variables and some decisions to be made during the init.sh script.

If we choose to install Guardium Insights, the default values of the variables are set automatically. If you decline the GI installation, you will be asked separately whether to install ICS in standalone mode and then asked detailed questions about the deployment.

An example of ICS configuration and installation can be followed here.

Environment variables related to IBM Common Services installation:

| Description | Environment variable | GI 3.0.0 and GI 3.0.1 default value |
|---|---|---|
| Will gi-runner automate the ICS installation? | GI_ICS | Y |
| ICS version to install, defined as an integer value corresponding to: 1 - 3.7.1. This list can change over time to support new versions of ICS | GI_ICS_VERSION | GI 3.0.0 - 3.7.4 |
| Seven values (N or Y) as a comma-separated list. Meaning of the values in order of appearance: position 1 = Y installs ibm-zen-operator; position 2 = Y installs ibm-monitoring-exporters-operator, ibm-monitoring-prometheusext-operator and ibm-monitoring-grafana-operator; position 3 = Y installs ibm-events-operator; position 4 = Y installs ibm-auditlogging-operator; position 5 = Y installs ibm-metering-operator; position 6 = Y installs ibm-mongodb-operator; position 7 = Y installs ibm-elastic-stack-operator. These operands are always installed: ibm-cert-manager-operator, ibm-iam-operator, ibm-healthcheck-operator, ibm-management-ingress-operator, ibm-licensing-operator, ibm-commonui-operator, ibm-ingress-nginx-operator (obsolete from 3.10+), ibm-platform-api-operator | GI_ICS_OPERANDS | N,N,Y,Y,N,Y,N |
| Size of the ICS deployment: S (small), M (medium), L (large). gi-runner is designed to automate GI installation in test environments, for which a small ICS deployment is sufficient. To install ICS in an HA architecture or to support more than ten simultaneous ICS UI sessions, change the size to medium or large | GI_ICS_SIZE | S |
| ICS admin account password | GI_ICSADMIN_PWD | |
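The position encoding of GI_ICS_OPERANDS is easy to get wrong. The sketch below, a hypothetical helper that is not part of gi-runner, decodes a value into the optional operators it selects, using exactly the position mapping from the table above.

```bash
#!/usr/bin/env bash
# Sketch (not part of gi-runner): list the optional ICS operators selected by a
# GI_ICS_OPERANDS value, using the position mapping from the table above.
ics_optional_operators() {
  local -a flags groups
  local i
  groups=(
    "ibm-zen-operator"
    "ibm-monitoring-exporters-operator ibm-monitoring-prometheusext-operator ibm-monitoring-grafana-operator"
    "ibm-events-operator"
    "ibm-auditlogging-operator"
    "ibm-metering-operator"
    "ibm-mongodb-operator"
    "ibm-elastic-stack-operator"
  )
  IFS=',' read -r -a flags <<< "$1"
  if [ "${#flags[@]}" -ne "${#groups[@]}" ]; then
    echo "expected ${#groups[@]} Y/N flags, got ${#flags[@]}" >&2
    return 1
  fi
  for i in "${!groups[@]}"; do
    if [ "${flags[$i]}" = "Y" ]; then
      # Unquoted on purpose: split position 2 into its three operator names.
      printf '%s\n' ${groups[$i]}
    fi
  done
}
```

For the default value, `ics_optional_operators "N,N,Y,Y,N,Y,N"` prints ibm-events-operator, ibm-auditlogging-operator and ibm-mongodb-operator, one per line; the always-installed operands are not listed.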

To install ICS start playbook:

ansible-playbook playbooks/04-install-ics.yaml

To log in to the ICS UI, the client machine must correctly resolve the name:

<bastion_ip> cp-console.apps.<cluster_domain>

Playbook 5 – GI installation

With ICS on board we can install Guardium Insights.

Automating the GI installation process requires additional environment variables and some decisions to be made during the init.sh script.

An example of GI configuration and installation can be seen here.

Environment variables related to Guardium Insights installation:

| Description | Environment variable | Value example |
|---|---|---|
| Will gi-runner automate the Guardium Insights installation? | GI_INSTALL_GI | Y |
| GI version to install: 0 - 3.0.0 | GI_VERSION | 0 |
| Size of the PVC for DB2 storage in GB (it should not be larger than 60% of the total OCS or rook-ceph storage size) | GI_DATA_STORAGE_SIZE | 4000 |
| GI deployment size: 0 - values-poc-lite (good performance for small non-production tests), 1 - values-dev (the smallest deployment, for tests only), 2 - values-small (small production deployment) | GI_SIZE_GI | 0 |
| Name of the OpenShift namespace where GI will be installed | GI_NAMESPACE_GI | gi3 |
| Comma-separated list of OCP nodes where DB2 will be installed. Each node must be a worker and should have more CPU and RAM than the other GI nodes. You can specify at most two nodes; in that case DB2 is deployed as an HA cluster | GI_DB2_NODES | m1 |
| Specifies whether the DB2 database in which events are stored should be encrypted. The option to disable encryption is available since GI 3.0.1 | GI_DB2_ENCRYPTED | Y |
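Two of the constraints above can be checked with simple arithmetic before starting playbook 5: GI_DATA_STORAGE_SIZE should not exceed 60% of the total cluster storage, and GI_DB2_NODES may list at most two nodes. In this hypothetical sketch (not part of gi-runner), the total OCS or rook-ceph storage size in GB is an input you supply yourself; it is not one of the gi-runner variables.

```bash
#!/usr/bin/env bash
# Sketch (not part of gi-runner): sanity checks for the GI storage variables.

# Usage: check_db2_pvc_size <GI_DATA_STORAGE_SIZE in GB> <total storage in GB>
check_db2_pvc_size() {
  local data_gb="$1" total_gb="$2" max_gb
  max_gb=$(( total_gb * 60 / 100 ))   # 60% of total, integer GB rounded down
  if [ "$data_gb" -gt "$max_gb" ]; then
    echo "GI_DATA_STORAGE_SIZE=${data_gb} exceeds 60% of ${total_gb} GB (max ${max_gb})" >&2
    return 1
  fi
  echo "OK: ${data_gb} GB <= ${max_gb} GB"
}

# Usage: check_db2_nodes <GI_DB2_NODES>; one or two nodes are accepted.
check_db2_nodes() {
  local -a nodes
  IFS=',' read -r -a nodes <<< "$1"
  if [ "${#nodes[@]}" -lt 1 ] || [ "${#nodes[@]}" -gt 2 ]; then
    echo "GI_DB2_NODES must list one or two nodes, got ${#nodes[@]}" >&2
    return 1
  fi
}
```

With the example value of 4000 GB, the total storage must be at least 6667 GB (4000 / 0.6 ≈ 6666.7): `check_db2_pvc_size 4000 6667` passes, while `check_db2_pvc_size 4000 6666` fails.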

To install GI start playbook:

ansible-playbook playbooks/05-install-gi.yaml

To log in to the GI UI, the client machine must correctly resolve the name:

<bastion_ip> insights.apps.<cluster_domain>

Playbook 50 – OpenLDAP on bastion

Guardium Insights relies on external directory services for user identities. Therefore, gi-runner provides the ability to install an OpenLDAP instance on the bastion if you do not have access to such services.

You can see how to use this functionality at this link.

If you install OpenLDAP, you will need to define some additional environment variables:

| Description | Environment variable | Value example |
|---|---|---|
| LDAP domain name in distinguished name (DN) format | GI_LDAP_DOMAIN | DC=guardium,DC=notes |
| Comma-separated list of user account names to create | GI_LDAP_USERS | zibi,marco,ander |
| Password for LDAP users | GI_LDAP_USERS_PWD | |
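GI_LDAP_DOMAIN is given in DN form, while cluster domains are usually written in dotted form. The hypothetical helper below (not part of gi-runner) converts a dotted domain into the `DC=` format shown in the example.

```bash
#!/usr/bin/env bash
# Sketch (not part of gi-runner): build a GI_LDAP_DOMAIN-style DN from a
# dotted domain name, one DC= component per label.
domain_to_dn() {
  local -a labels
  local dn="" label
  IFS='.' read -r -a labels <<< "$1"
  for label in "${labels[@]}"; do
    # ${dn:+,} inserts a comma only when dn already has content.
    dn+="${dn:+,}DC=${label}"
  done
  echo "$dn"
}
```

For example, `domain_to_dn guardium.notes` prints `DC=guardium,DC=notes`, matching the example value in the table.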

To install OpenLDAP start playbook:

ansible-playbook playbooks/50-set_configure_ldap.yaml

Summary

The mechanism presented above for automating the installation of OpenShift, IBM Common Services and Guardium Insights can also be used in more complex architectures; an example with dedicated nodes for OCS can be followed at this link.

I hope that gi-runner will make it easier and shorten the time required to test solutions running on the OpenShift platform.

I would like to thank Devan Shah, Joshua Ho, Omar Raza without whom the project would not have a chance to take its current form and Dale Brocklehurst for his successful attempts to test the untested 🙂